Book Review: Noise: A Flaw in Human Judgment


Measurement, in everyday life as in science, is the act of using an instrument to assign a value on a scale to an object or event. You measure the length of a carpet in inches, using a tape measure. You measure the temperature in degrees Fahrenheit or Celsius by consulting a thermometer. The act of making a judgment is similar. When judges determine the appropriate prison term for a crime, they assign a value on a scale. So do underwriters when they set a dollar value to insure a risk, or doctors when they make a diagnosis. (The scale need not be numerical: “guilty beyond a reasonable doubt,” “advanced melanoma,” and “surgery is recommended” are judgments, too.) Judgment can therefore be described as measurement in which the instrument is a human mind.

Ten years after “Thinking, Fast and Slow”, Daniel Kahneman has written another great book about human judgment and decision making: “Noise: A Flaw in Human Judgment”. Together with Cass Sunstein and Olivier Sibony, Kahneman now focuses on how noise affects the decisions we make in our daily lives and in our professional environments.

Noise is variability in judgments that should be identical.

The authors define noise as “the unwanted variability of judgments”, whereas bias is a systematic error in one direction. Nowadays we are very focused on eliminating, or at least reducing, bias; the book argues that noise deserves just as much attention.

The book is full of examples of noisy decisions in many different fields, including medicine, justice, business and, surprisingly (at least to me), fingerprint analysis.

The book is structured in six parts and three very useful appendices. In the first part, the authors define the concept of noise and show how it affects the criminal justice system. The second part introduces a way to measure errors (bias and noise) based on the mean squared error. In the remaining four parts, the authors apply those concepts to analyse noise in many different situations, such as project estimation, hiring, feedback cycles and more.

The book also raises a very important discussion about how algorithms and machine learning systems can produce bias. There is a reference to Cathy O’Neil’s “Weapons of Math Destruction”, and the authors argue that improving algorithms is worth the effort.

These examples and many others lead to an inescapable conclusion: although a predictive algorithm in an uncertain world is unlikely to be perfect, it can be far less imperfect than noisy and often biased human judgment. This superiority holds in terms of both validity (good algorithms almost always predict better) and discrimination (good algorithms can be less biased than human judges). If algorithms make fewer mistakes than human experts do and yet we have an intuitive preference for people, then our intuitive preferences should be carefully examined.

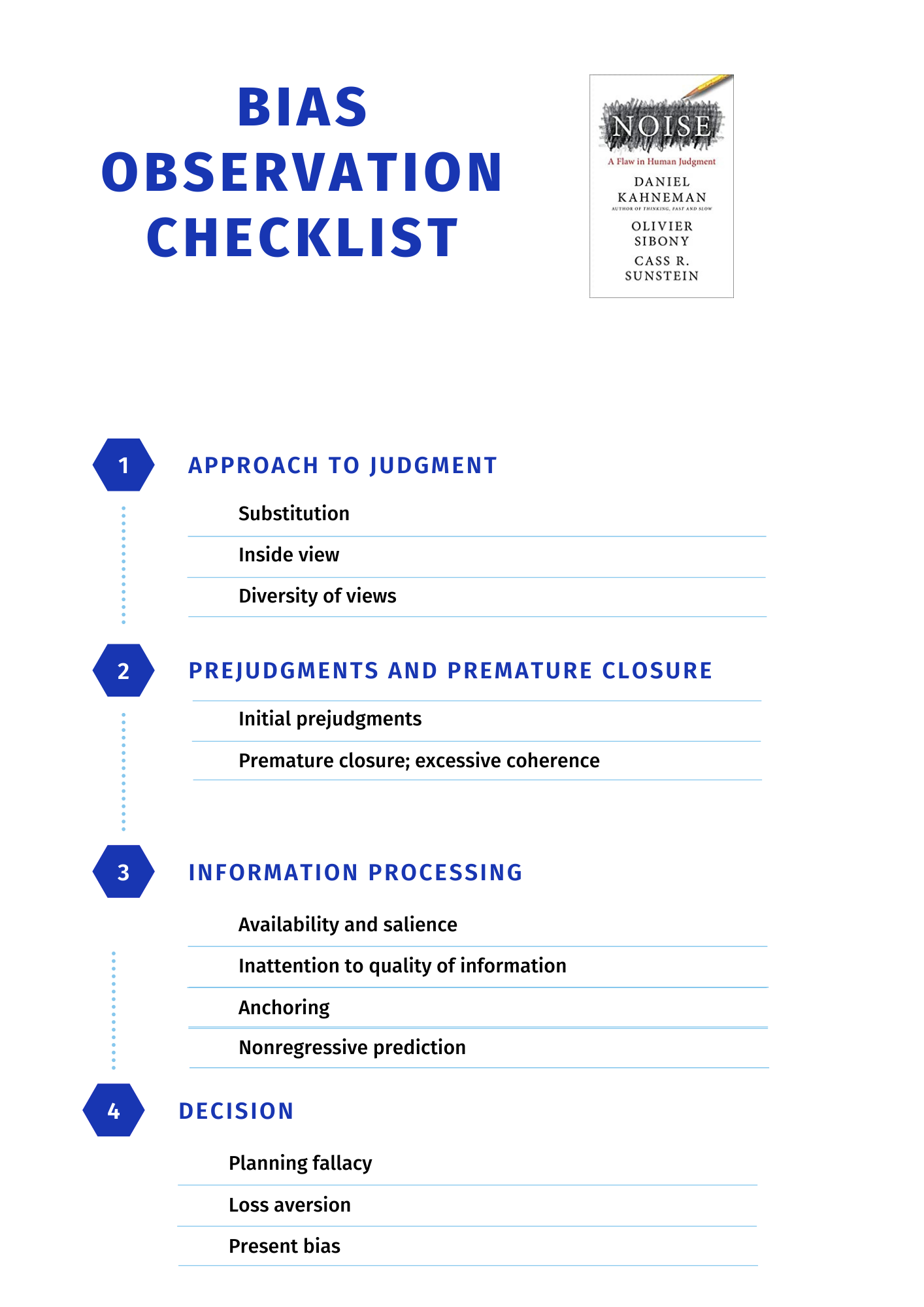

Finally, the appendices offer very practical advice, presenting a set of guidelines that can be applied in most companies. Appendix A explains how to conduct a noise audit, so we can understand how much noise is present in an institution’s decision process. Appendix B is a checklist for decision observers (the professionals who help a team make better decisions), and Appendix C explains how to correct predictions.
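At its core, a noise audit gives the same cases to several professionals and measures how far their judgments diverge. The sketch below assumes numeric judgments (for example, insurance premiums) and uses the median relative difference between every pair of judges as the noise measure. That metric, the `noise_index` and `audit` helpers, and the sample quotes are illustrative assumptions, not the exact procedure described in Appendix A.

```python
from itertools import combinations
from statistics import median


def noise_index(judgments):
    """Median relative difference between every pair of judges for one case.

    The relative difference of a pair is |a - b| divided by the average of a and b,
    so 0.0 means every judge gave exactly the same answer.
    """
    if len(judgments) < 2:
        raise ValueError("need at least two judgments of the same case")
    if any(j <= 0 for j in judgments):
        raise ValueError("this illustrative metric assumes strictly positive judgments")
    diffs = [abs(a - b) / ((a + b) / 2) for a, b in combinations(judgments, 2)]
    return median(diffs)


def audit(cases):
    """Map each case name to its noise index (hypothetical helper)."""
    return {name: noise_index(judgments) for name, judgments in cases.items()}


if __name__ == "__main__":
    # Hypothetical premiums quoted by three underwriters for the same two cases.
    quotes = {"case A": [100_000, 150_000, 200_000], "case B": [90_000, 95_000, 100_000]}
    for name, index in audit(quotes).items():
        print(f"{name}: {index:.0%}")
```

Using a relative difference rather than an absolute one lets cases of very different sizes be compared on the same scale.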

How can error be measured?

- “Oddly, reducing bias and noise by the same amount has the same effect on accuracy.”

- “Reducing noise in predictive judgment is always useful, regardless of what you know about bias.”

- “Predictive judgments are involved in every decision, and accuracy should be their only goal. Keep your values and your facts separate.”

In the early 19th century, Carl Friedrich Gauss and Adrien-Marie Legendre developed the method of least squares, which provides a rule for scoring the contribution of individual errors to the overall error. The method has applications in many areas, including finance, statistics and noise analysis. The authors use it to derive the error equations, which are reminiscent of the Pythagorean theorem: squared bias and squared noise add up to the overall error, just as the squares of the two legs of a right triangle add up to the square of the hypotenuse.

Error in a single measurement = Bias + Noisy Error

Overall Error (MSE) = Bias² + Noise²
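To make the two equations concrete, here is a minimal Python sketch. It assumes we know the true value (which is rarely the case for real judgments) and measures noise as the population standard deviation of the errors; the function name `error_decomposition` and the sample numbers are hypothetical.

```python
from statistics import fmean, pstdev


def error_decomposition(judgments, true_value):
    """Split the mean squared error of repeated judgments of one case into bias and noise.

    Each single error equals bias + noisy error, where the noisy error is the
    deviation of that error from the average error.
    """
    errors = [j - true_value for j in judgments]  # error of each single judgment
    bias = fmean(errors)                          # average error: the systematic part
    noise = pstdev(errors)                        # spread of the errors around the bias
    mse = fmean([e * e for e in errors])          # overall error: mean of squared errors
    return mse, bias, noise


if __name__ == "__main__":
    # Hypothetical estimates of a quantity whose true value is 10.
    mse, bias, noise = error_decomposition([12, 14, 9, 15], true_value=10)
    print(f"MSE = {mse:.2f}, Bias^2 + Noise^2 = {bias**2 + noise**2:.2f}")
```

Running it prints `MSE = 11.50, Bias^2 + Noise^2 = 11.50`: a bias of 2.5 contributes 6.25 and the noise contributes the remaining 5.25. Because bias and noise enter the formula symmetrically, neither term is more important than the other in the overall error.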

Group Polarization

- “As I always suspected, ideas about politics and economics are a lot like movie stars. If people think that other people like them, such ideas can go far.”

- “I’ve always been worried that when my team gets together, we end up confident and unified — and firmly committed to the course of action that we choose. I guess there’s something in our internal processes that isn’t going all that well!”

- “Group polarization. The basic idea is that when people speak with one another, they often end up at a more extreme point in line with their original inclinations. (…) Internal discussions often create greater confidence, greater unity, and greater extremism, frequently in the form of increased enthusiasm.”

Chapter 8 addresses one of the most relevant issues of our time: it analyses what makes some ideas popular and how this can be misused to influence political opinion. The authors also explain how group discussions can push each individual toward more extreme positions. This chapter reminded me of another book I read recently, “The Rules of Contagion”. Both readings broadened my world view and gave me a better understanding of the increasing polarization we see nowadays, and of how easily extreme ideas spread on social media.

Understanding: Causal Thinking vs. Statistical Thinking

“When the authors of the Fragile Families challenge equate understanding with prediction (or the absence of one with the absence of the other), they use the term understanding in a specific sense. There are other meanings of the word: if you say you understand a mathematical concept or you understand what love is, you are probably not suggesting an ability to make any specific predictions.

However, in the discourse of social science, and in most everyday conversations, a claim to understand something is a claim to understand what causes that thing. The sociologists who collected and studied the thousands of variables in the Fragile Families study were looking for the causes of the outcomes they observed. Physicians who understand what ails a patient are claiming that the pathology they have diagnosed is the cause of the symptoms they have observed. To understand is to describe a causal chain. The ability to make a prediction is a measure of whether such a causal chain has indeed been identified. And correlation, the measure of predictive accuracy, is a measure of how much causation we can explain.”

Chapter 12, in my opinion the most interesting chapter of the book, discusses what it means to understand something. We usually think in terms of cause and effect, which can be highly misleading when we are dealing with complex phenomena. Causal thinking creates stories that explain how specific events lead to outcomes. The chapter illustrates this with the example of a social worker:

To experience causal thinking, picture yourself as a social worker who follows the cases of many underprivileged families. You have just heard that one of these families, the Joneses, has been evicted. Your reaction to this event is informed by what you know about the Joneses. As it happens, Jessica Jones, the family’s breadwinner, was laid off a few months ago. She could not find another job, and since then, she has been unable to pay the rent in full. She made partial payments, pleaded with the building manager several times, and even asked you to intervene (you did, but he remained unmoved). Given this context, the Joneses’ eviction is sad but not surprising. It feels, in fact, like the logical end of a chain of events, the inevitable denouement of a foreordained tragedy.

It is easy to look for narratives that explain the world; this is the default way we form our world view, and it is reinforced by stories in the media and by the way we learn history. People think in stories, not statistics.

“More broadly, our sense of understanding the world depends on our extraordinary ability to construct narratives that explain the events we observe. The search for causes is almost always successful because causes can be drawn from an unlimited reservoir of facts and beliefs about the world. As anyone who listens to the evening news knows, for example, few large movements of the stock market remain unexplained. The same news flow can “explain” either a fall of the indices (nervous investors are worried about the news!) or a rise (sanguine investors remain optimistic!).”

Statistical thinking, on the other hand, is more sophisticated and effortful. It requires the energy and attention of System 2 (slow, deliberate thought) and some level of specialized training. It is applied in scientific research, and it takes into account that the world is much more complex than a simple chain of cause and effect. In the example above, the Joneses’ eviction is seen as a statistically likely outcome, given prior observations of similar cases, and statistics and data analysis are used to make an informed prediction.
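The statistical view can be sketched in a few lines: instead of following the Joneses’ story, we look up how often eviction happened in a reference class of comparable past cases. Everything here is hypothetical: the `Case` fields, the `base_rate` helper and the five records are invented for illustration and are not data from the book.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Case:
    """One past case; the fields are hypothetical features chosen for illustration."""
    breadwinner_laid_off: bool
    partial_rent_payments: bool
    evicted: bool


def base_rate(cases, **features):
    """Share of evictions among past cases that match all the given features."""
    matches = [
        c for c in cases
        if all(getattr(c, name) == value for name, value in features.items())
    ]
    if not matches:
        raise ValueError("no comparable cases: the reference class is empty")
    return sum(c.evicted for c in matches) / len(matches)


# Hypothetical records, not real data.
HYPOTHETICAL_CASES = [
    Case(True, True, True),
    Case(True, True, False),
    Case(True, True, True),
    Case(True, False, True),
    Case(False, True, False),
]

if __name__ == "__main__":
    rate = base_rate(HYPOTHETICAL_CASES, breadwinner_laid_off=True, partial_rent_payments=True)
    print(f"Eviction rate among similar cases: {rate:.0%}")
```

The prediction comes from frequencies in the reference class rather than from the details of one family’s story; narrowing the reference class makes it more similar to the case at hand, but also leaves fewer cases to learn from.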

How to make better decisions

- “Do you know what specific bias you’re fighting and in what direction it affects the outcome? If not, there are probably several biases at work, and it is hard to predict which one will dominate.”

- “Before we start discussing this decision, let’s designate a decision observer.”

- “We have kept good decision hygiene in this decision process; chances are the decision is as good as it can be.”

Many organizations aim to debias judgments, and to achieve that they have introduced a range of processes and technologies. In chapter 19, the authors discuss these interventions and explain how we can incorporate them into our own organizations. Decision hygiene is a set of practices for reducing noise in decision making; following its principles means adopting mechanisms that reduce noise without ever knowing which specific errors you are avoiding. Some of those principles are:

- Sequencing information to limit the formation of premature intuitions. Sometimes, less information is better.

- When a second person is asked to judge a decision, they should not be aware of the first judgment.

- Aggregating multiple independent estimates

- Designating a decision observer to identify signs of bias
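The aggregation principle has a simple statistical basis: if judgments are independent and equally noisy, the noise of their average shrinks by a factor of √n, while any bias they all share stays untouched. The simulation below is a sketch under those assumptions (normally distributed errors, a shared bias of 5 units, a hypothetical `simulate_aggregation` helper); it is not taken from the book.

```python
import random
from statistics import fmean, pstdev


def simulate_aggregation(true_value, shared_bias, noise_sd, n_judges, n_trials, seed=42):
    """Compare one judge with the average of n independent judges.

    Returns (noise of a single judgment, noise of the averaged judgment,
    bias remaining in the averaged judgment).
    """
    rng = random.Random(seed)  # seeded so the run is reproducible
    single, averaged = [], []
    for _ in range(n_trials):
        # Every judge shares the same bias but has independent noise.
        judgments = [
            true_value + shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n_judges)
        ]
        single.append(judgments[0])
        averaged.append(fmean(judgments))
    return pstdev(single), pstdev(averaged), fmean(averaged) - true_value


if __name__ == "__main__":
    one, avg, bias = simulate_aggregation(100.0, 5.0, 10.0, n_judges=100, n_trials=2000)
    print(f"noise of one judge: {one:.2f}")
    print(f"noise of the average of 100 judges: {avg:.2f}")
    print(f"bias left in the average: {bias:.2f}")
```

With these settings the printed values should be close to 10, 1 and 5: averaging 100 independent judgments cuts noise roughly tenfold but leaves the shared bias in place. This is also why independence matters; if judges see each other’s estimates, their errors become correlated and averaging helps much less.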

Important chapters

- Review and Conclusion: Taking Noise Seriously

- Appendix A: How to Conduct a Noise Audit

- Appendix B: A Checklist for a Decision Observer

- Appendix C: Correcting Predictions

- 20 - Sequencing Information in Forensic Science

- 21 - Selection and Aggregation in Forecasting

- 22 - Guidelines in Medicine

- 24 - Structure in Hiring

Bias is a problem, and so is noise. It is a false hope that merely becoming aware of our errors will lead us to make better decisions, but it is possible to design better systems and organizations that produce better judgments. Together with Kahneman’s previous work, this is a very important book, worth re-reading from time to time for its practical and philosophical value.

Further readings

- Superforecasting: The Art and Science of Prediction by Philip E. Tetlock and Dan Gardner

- Thinking, Fast and Slow by Daniel Kahneman

- Mindware: Tools for Smart Thinking by Richard Nisbett

- Misbehaving: The Making of Behavioral Economics by Richard Thaler

- You’re About to Make a Terrible Mistake by Olivier Sibony

- Weapons of Math Destruction by Cathy O'Neil

- Best Books on Behavioral Economics

- Daniel Kahneman at Princeton

- Noise: How to Overcome the High, Hidden Cost of Inconsistent Decision Making by Daniel Kahneman, Andrew M. Rosenfield, Linnea Gandhi, and Tom Blaser

- #68 Putting Your Intuition on Ice with Daniel Kahneman

- A Chat with Daniel Kahneman

- Bias and Noise: Daniel Kahneman on Errors in Decision-Making

- Bias Is a Big Problem. But So Is ‘Noise.’ by Daniel Kahneman